Interfaces, Integration, and Interoperability for AI Tools

The Service-Meister team, on creating templates and all necessary blueprints to realize the vision of Service 4.0.

© NanoStockk | istockphoto.com

The targeted use of artificial intelligence (AI) in companies is increasingly seen as a game changer that will create massive added value in the coming years. Current surveys reflect this impression: for the German economy alone, they assume that AI will create added value of approx. 40 billion euros within five years, i.e., approx. 8 billion euros per year. Accordingly, the use of AI in business applications continues to grow, in both the scope and the type of possible fields of application.

This development, and the need to participate in it, is a central issue for German SMEs in particular. Shortages in apprenticeships and a lack of skilled workers, growing international competition, and new types of business models are all areas that require urgent attention and that the use of AI could help German SMEs address. Because small and medium-sized companies usually have no development or digitalization department, or only a small one, this boils down to a very concrete question: How should SMEs get started with AI solutions in this situation?

The Service-Meister research project (www.servicemeister.org) has dedicated itself to answering this question. Started in January 2020 and running for three years, it is working to deliver new AI tools for digital service, a templatization of AI approaches, and a platform offering for SMEs.

To make this possible, a unified target image for technical Service 4.0 was first created, based on the digitalization of industrial service. For this purpose, the service life cycle was developed, which represents a completely digital encapsulation of technical service. Here, the individual steps of each service case are mapped onto six consecutive stages.

Fig. 1 Service Life Cycle for Service 4.0: segments of a cycle which together represent nothing less than the complete processing of service cases for Service 4.0.

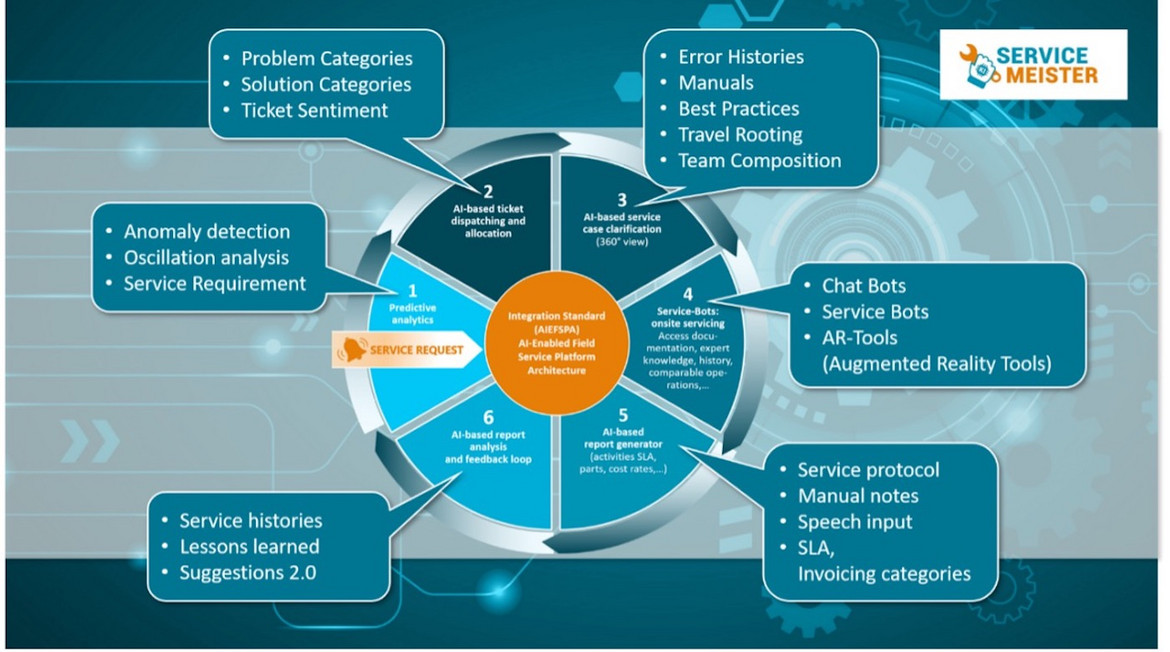

This service life cycle (SLC) is divided into six areas in which the respective tasks per segment are realized by specific AI tools:

- The service request arrives, is recognized and forwarded by an AI tool, and is processed for predictive analytics.

- The incoming service tickets are prioritized according to their importance and assigned to the existing problem or solution categories.

- For a 360° view, all necessary current and historical information is compiled for the upcoming service call.

- The actual service deployment takes place, now with massive support from AI-based tools.

- Service reports and their bases are automatically generated.

- The service reports are then analyzed to extrapolate best practices.

For all this to work, all AI tools used must follow a central integration standard, identified here in the middle of the SLC as AI-Enabled Field Service Platform Architecture (AIEFSPA), which we will explain later.

Developing the SLC and, more importantly, the associated AI tools and agreeing on a common, technical working basis is the central task of the Service-Meister project. This technical working basis can be summarized as a templatization that provides all the necessary blueprints to make Service 4.0 operable. We explain the components required for this in the following.

1. Generic Building Blocks Layout

An essential requirement for the templatization of the entire SLC within the Service-Meister project is the creation of coherent Building Blocks. In software production, this refers to blocks of structurally similar code that have standardized routines and allow a high degree of reusability of the software modules used (see Jopp et al., Building Blocks for Technical Service 4.0).

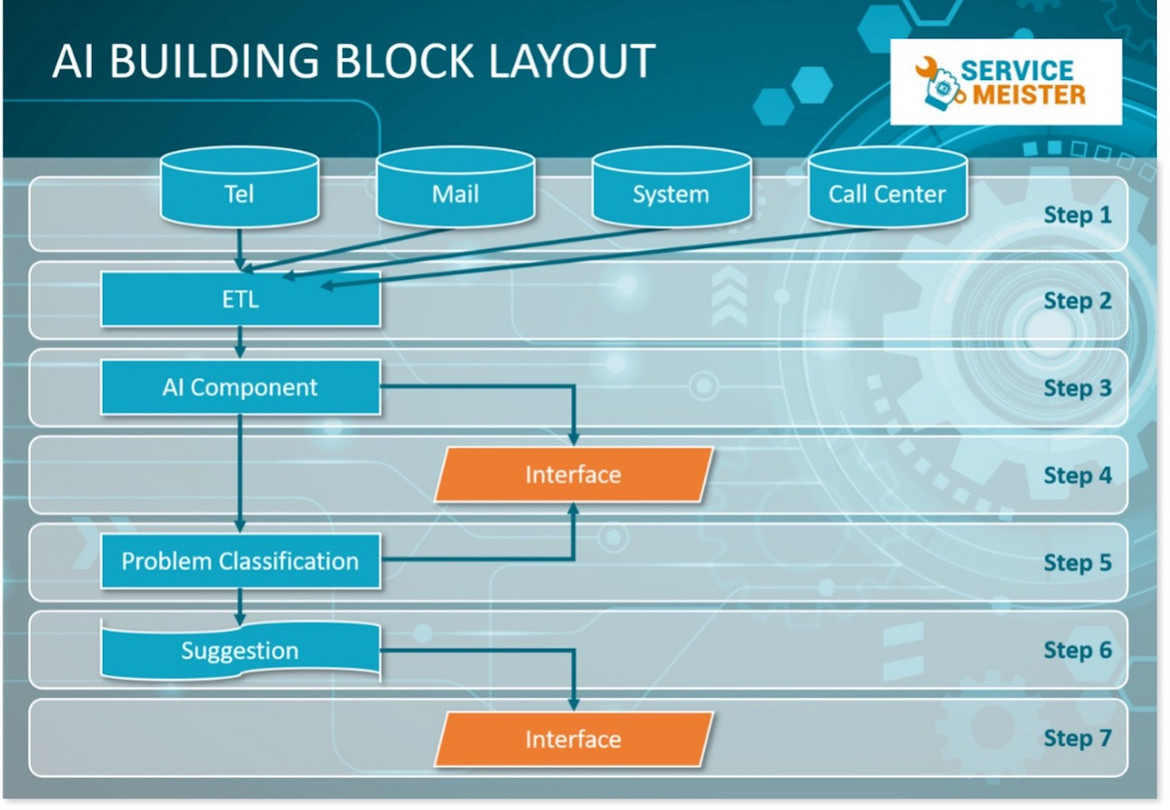

The basis for this is provided by the processes in Fig. 2, which shows a generic layout for the Building Blocks developed in Service-Meister. In the first step, a message is received, e.g., a service request; this can originate from various data and information sources (see Step 1: Tel, Mail, etc.). In a subsequent step, this data is pre-processed using Extract-Transform-Load (ETL) methods (see Fig. 2). This is followed by a workflow step referred to in the figure as the AI component. This is where the individual added value lies for each segment, delivered by the respective AI-supported tool, which can be adapted in each case. A subsequent interface then allows the user to read and evaluate the resulting problem or solution categories, or more generally, the resulting suggestions of the AI tools.

Fig. 2 Generic scheme for the SLC Building Block

This is the general flowchart for the Building Blocks. As mentioned, the respective modification takes place in the process step of the AI components, although, of course, the other process steps can also each undergo adjustments if necessary.
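The flow of such a Building Block can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the project's actual code: the names `Suggestion`, `etl`, `keyword_component`, and `run_building_block`, as well as the message field `body`, are hypothetical. The point it shows is that the AI component is an exchangeable function, while the receiving, ETL, and output steps stay the same.

```python
from dataclasses import dataclass
from typing import Callable

# All names in this sketch are hypothetical; the article does not publish its code.


@dataclass
class Suggestion:
    """One suggestion handed to the user interface."""
    label: str
    score: float


# The exchangeable AI component: any callable from cleaned text to suggestions.
AIComponent = Callable[[str], list[Suggestion]]


def etl(raw_message: dict) -> str:
    """ETL step: extract the text field, lowercase it, and normalize whitespace."""
    text = raw_message.get("body", "")
    return " ".join(text.lower().split())


def keyword_component(text: str) -> list[Suggestion]:
    """Placeholder AI component: flags messages that mention a leak."""
    hit = "leak" in text
    return [Suggestion(label="leak" if hit else "other", score=1.0 if hit else 0.5)]


def run_building_block(raw_message: dict, ai_component: AIComponent) -> list[dict]:
    """Receive -> ETL -> AI component -> plain dictionaries for the user interface."""
    cleaned = etl(raw_message)
    suggestions = ai_component(cleaned)
    return [{"label": s.label, "score": s.score} for s in suggestions]


if __name__ == "__main__":
    message = {"source": "mail", "body": "  Pump 7 has an oil LEAK  "}
    print(run_building_block(message, keyword_component))
```

Swapping `keyword_component` for another callable with the same signature adapts the block to a different segment without touching the other steps.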

Fig. 3 Specific content of the AI components that can be used to implement the content of the six different segments of the SLC

As an example, the content of two specific AI components is explained below to illustrate the general working principle. In the second segment, the ticket dispatcher, AI methods from Natural Language Processing (NLP) are used to analyze the text of each service ticket in order to determine the problem category, the solution category, and the sentiment of the ticket. In the subsequent third segment, all the information required to carry out the upcoming service case is compiled. Here, too, NLP methods are used to analyze text content, which can consist of error histories, manuals, and best practices, for example. At the end of this segment, solution containers are created that provide all the necessary information to perform the service operation. Furthermore, the AI tools can compile service routes as well as an optimal set of necessary skills for the service team.

The code of the AI components is generally written in Python, integrating the necessary libraries such as pandas, scikit-learn, and NLTK for NLP routines.
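To make the ticket dispatcher idea more tangible, the following sketch assigns a problem category to a ticket text with a small multinomial Naive Bayes classifier. It is an illustration only: it deliberately uses just the Python standard library rather than the libraries named above, the training tickets and category names are invented, and the class `NaiveBayesDispatcher` is a hypothetical stand-in for whatever models the project actually uses.

```python
import math
import re
from collections import Counter, defaultdict

# Invented example tickets; real training data would come from the service system.
TRAINING_TICKETS = [
    ("hydraulic pump is leaking oil", "hydraulics"),
    ("pressure drop in hydraulic line", "hydraulics"),
    ("control cabinet fuse blown", "electrical"),
    ("motor does not start no power", "electrical"),
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesDispatcher:
    """Hypothetical ticket dispatcher: multinomial Naive Bayes with add-one smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        self.vocabulary: set[str] = set()

    def fit(self, tickets: list[tuple[str, str]]) -> None:
        """Count words per category from (text, category) pairs."""
        for text, category in tickets:
            tokens = tokenize(text)
            self.word_counts[category].update(tokens)
            self.doc_counts[category] += 1
            self.vocabulary.update(tokens)

    def predict(self, text: str) -> str:
        """Return the category with the highest log-probability for the text."""
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocabulary)
        tokens = tokenize(text)
        best_category, best_score = "", -math.inf
        for category, n_docs in self.doc_counts.items():
            counts = self.word_counts[category]
            total_words = sum(counts.values())
            score = math.log(n_docs / total_docs)  # log prior
            for token in tokens:
                # Add-one smoothing keeps unseen words from zeroing the probability.
                score += math.log((counts[token] + 1) / (total_words + vocab_size))
            if score > best_score:
                best_category, best_score = category, score
        return best_category


if __name__ == "__main__":
    dispatcher = NaiveBayesDispatcher()
    dispatcher.fit(TRAINING_TICKETS)
    print(dispatcher.predict("oil leaking from pump"))
```

The same structure could be trained a second time on solution categories or sentiment labels; in practice, a library model would likely replace the hand-written classifier.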

2. Interfaces

Certainly, the digital maturity of the institution is a critical point in the introduction of new software systems, and it can decide between long-term success and withdrawal after an unsuccessful proof of concept (PoC). In addition to user acceptance of the new software, another critical point in a software introduction project is the existence of coordinated, standardized interfaces. If these do not exist, a great deal of resources must be invested in individualized solutions to the interface problem. One example is the complications arising from legacy systems, which exist within any corporate zoo of software products and which the respective institution usually has to invest many hours in resolving.

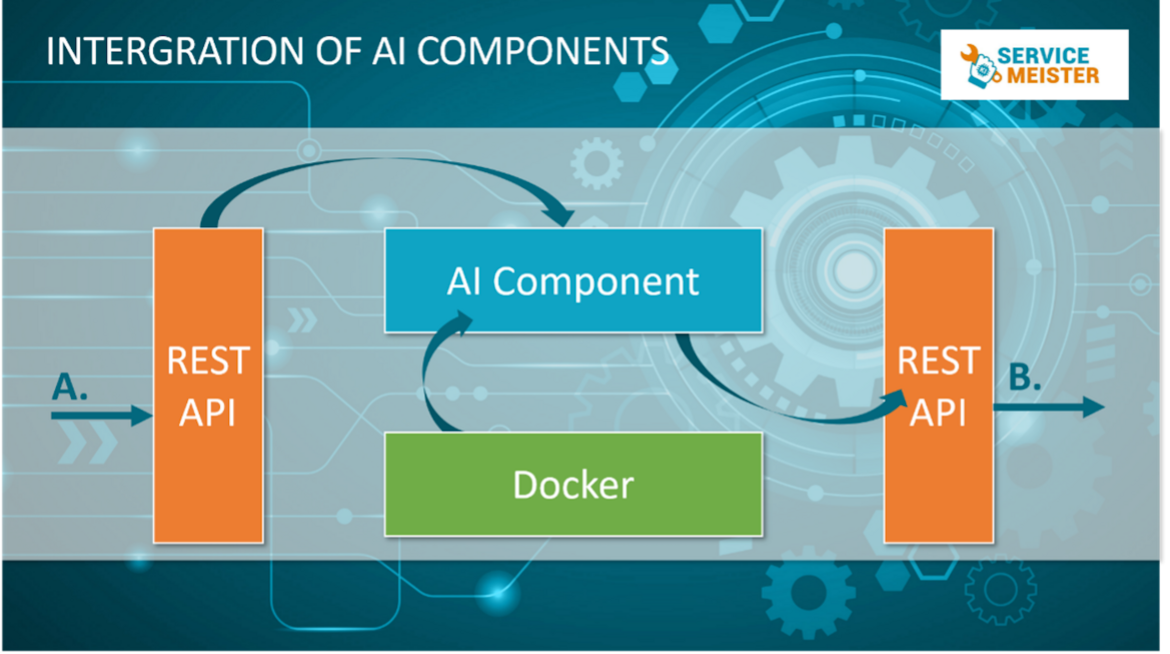

A central approach to templating the AI tools for the SLC in the Service-Meister project is, therefore, to set up generalized interfaces with which the respective AI products can be easily connected. The use of application programming interfaces (APIs) based on the representational state transfer (REST) approach for the architecture of distributed systems, such as web services, is suitable for this purpose.

Fig. 4 Generalized interface structure for the integration of AI components

The basic structure of the interfaces as a black box system can be seen in Fig. 4. It consists of four parts: (1) an inbound REST API endpoint that passes the incoming, pre-processed data from the ETL component to (2) the specific AI component; (3) an outbound REST API endpoint that passes the processed suggestions from the AI component to the output windows; and (4) a Docker container, described below. The REST API endpoints are usually documented with an OpenAPI description in JSON (openapi.json), which means that all requests and responses are specified and documented in JSON format.

This is particularly convenient if containerization, i.e., virtualization at the operating-system level with tools such as Docker, is used to bring the applications to the server. A Docker container contains an application and all resources necessary at runtime. Thus, Dockerization pays off particularly well in cluster environments and partially distributed systems.
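As a rough illustration of this interface structure, the sketch below wraps a placeholder AI component in a JSON-over-HTTP endpoint using only the Python standard library. Several things here are assumptions and not taken from the project: the path `/v1/suggestions`, the field names `text` and `problem_category`, the port, and the simplification that the outbound suggestions are returned directly as the HTTP response instead of being sent through a separate outbound endpoint.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical path, field names, and port; none of them come from the project.


def ai_component(features: dict) -> dict:
    """Placeholder for the segment-specific AI tool (invented logic)."""
    text = str(features.get("text", "")).lower()
    category = "hydraulics" if "pump" in text else "unknown"
    return {"problem_category": category}


def handle_inbound(body: bytes) -> tuple[int, dict]:
    """Validate the JSON sent by the ETL step and call the AI component.

    Returns an HTTP status code and the JSON-serializable response body.
    """
    try:
        payload = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "request body must be valid JSON"}
    if not isinstance(payload, dict) or "text" not in payload:
        return 400, {"error": "field 'text' is required"}
    return 200, {"suggestions": [ai_component(payload)]}


class BuildingBlockHandler(BaseHTTPRequestHandler):
    """HTTP wrapper: accepts POST requests and answers with JSON."""

    def do_POST(self) -> None:
        if self.path != "/v1/suggestions":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_inbound(self.rfile.read(length))
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8080) -> None:
    """Start the server; inside a container this would be the entry point."""
    HTTPServer(("0.0.0.0", port), BuildingBlockHandler).serve_forever()


if __name__ == "__main__":
    # Exercise the endpoint logic without opening a network socket.
    print(handle_inbound(b'{"text": "Pump 7 leaks"}'))
```

Keeping the request handling in `handle_inbound` separate from the HTTP wrapper means the same logic can be tested directly and later moved behind a different web framework if needed.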

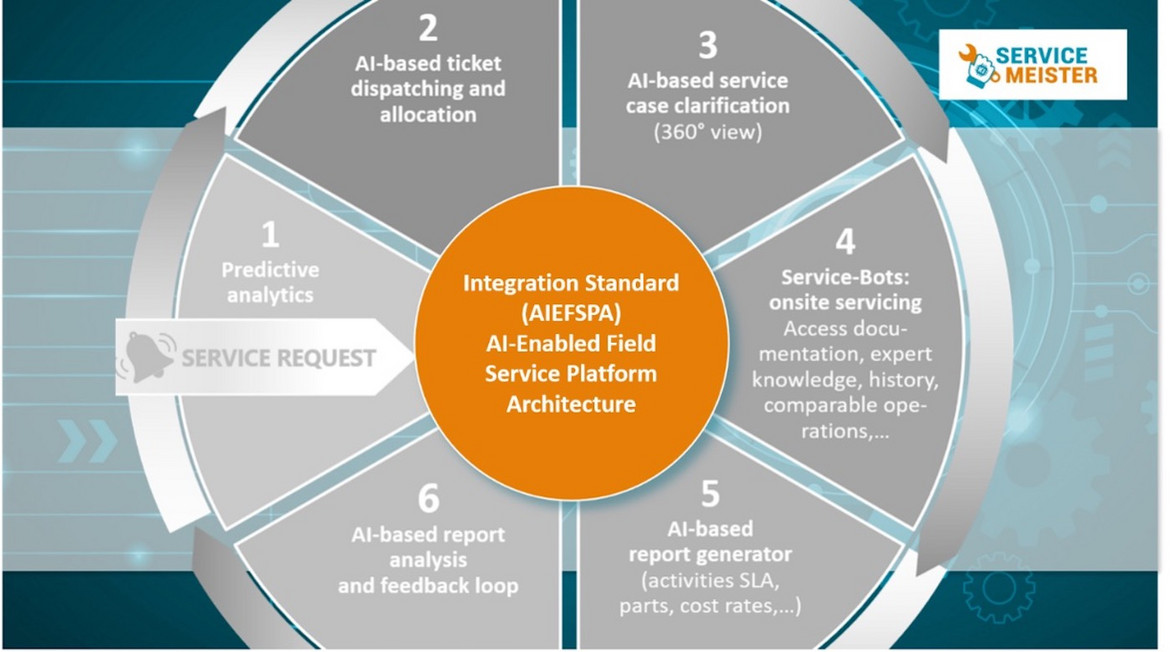

4. AIEFSPA: AI-Enabled Field Service Platform Architecture

Another important aspect is the need for a standard that ensures secure integration and coordination across the possibly distributed systems involved when individual AI-based tools and components interact. In the area of machine communications, a good example of this approach is the Open Platform Communications Unified Architecture (OPC UA). OPC UA is a platform-independent standard for data exchange based on a service-oriented architecture (SOA).

Fig. 5 Integration Standard (AIEFSPA) AI-Enabled Field Service Platform Architecture

OPC UA allows machine data to be described comprehensibly and transported securely. We would like to develop such a standard for the AI service apps and make it available in further steps. Although the interaction of REST API endpoints described above, together with the potential virtualization of applications on servers and in cluster environments, lets us work around some issues, we will also document requests and queries to and from interfaces, possibly using the OpenAPI JSON format. The developments for this are still ongoing; the working name of this format is AI-Enabled Field Service Platform Architecture (AIEFSPA), on which we will be providing information in time for fall 2021.
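Since AIEFSPA is still under development, its format cannot be shown here. Assuming that the JSON-based documentation format meant in this article is an OpenAPI description, the following sketch shows what a minimal description of one AI tool interface could look like when built in Python and serialized to JSON. The path, titles, and schema fields are all hypothetical.

```python
import json


def build_openapi_description() -> dict:
    """Return a minimal OpenAPI 3.0 description for one hypothetical AI tool endpoint."""
    ticket_ref = {"$ref": "#/components/schemas/Ticket"}
    suggestions_ref = {"$ref": "#/components/schemas/SuggestionList"}
    return {
        "openapi": "3.0.3",
        "info": {"title": "Ticket dispatcher (hypothetical)", "version": "0.1.0"},
        "paths": {
            "/v1/suggestions": {
                "post": {
                    "summary": "Submit pre-processed ticket data, receive suggestions",
                    "requestBody": {
                        "required": True,
                        "content": {"application/json": {"schema": ticket_ref}},
                    },
                    "responses": {
                        "200": {
                            "description": "Suggestions produced by the AI component",
                            "content": {"application/json": {"schema": suggestions_ref}},
                        }
                    },
                }
            }
        },
        "components": {
            "schemas": {
                "Ticket": {
                    "type": "object",
                    "required": ["text"],
                    "properties": {"text": {"type": "string"}},
                },
                "SuggestionList": {
                    "type": "object",
                    "properties": {
                        "suggestions": {
                            "type": "array",
                            "items": {
                                "type": "object",
                                "properties": {"problem_category": {"type": "string"}},
                            },
                        }
                    },
                },
            }
        },
    }


if __name__ == "__main__":
    # The serialized document is what would typically be published as openapi.json.
    print(json.dumps(build_openapi_description(), indent=2))
```

A machine-readable description of this kind lets third parties generate clients and validate requests without access to the tool's source code, which is the role a shared integration standard would play across all AI tools.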

5. Interoperability as Outlook

Through the use of generalized Building Blocks for AI-related software development and the use and development of open interfaces for the service API, we are creating the prerequisite for the AI tools to be combined and used with each other as desired via plug-and-play on the Service-Meister platform in the future. This is one of the central prerequisites for the integration of third-party providers on the platform.
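What plug-and-play could mean in code can be sketched with a small registry in which every tool implements the same minimal interface. This is an illustration under assumptions, not the platform's design: `AITool`, `ToolRegistry`, the two example tools, and all field names are hypothetical.

```python
from typing import Protocol


class AITool(Protocol):
    """Hypothetical common interface that every pluggable AI tool would implement."""

    name: str

    def suggest(self, payload: dict) -> dict:
        """Return new fields to be merged into the payload."""
        ...


class DispatcherTool:
    name = "dispatcher"

    def suggest(self, payload: dict) -> dict:
        text = str(payload.get("text", "")).lower()
        return {"problem_category": "hydraulics" if "pump" in text else "unknown"}


class ReportTool:
    name = "report"

    def suggest(self, payload: dict) -> dict:
        category = payload.get("problem_category", "unknown")
        return {"report": f"Ticket classified as {category}"}


class ToolRegistry:
    """Keeps tools by name and runs them in a caller-chosen order."""

    def __init__(self) -> None:
        self._tools: dict[str, AITool] = {}

    def register(self, tool: AITool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"tool '{tool.name}' is already registered")
        self._tools[tool.name] = tool

    def run_chain(self, names: list[str], payload: dict) -> dict:
        """Pass the payload through the named tools, merging each tool's output."""
        for name in names:
            payload = {**payload, **self._tools[name].suggest(payload)}
        return payload


if __name__ == "__main__":
    registry = ToolRegistry()
    registry.register(DispatcherTool())
    registry.register(ReportTool())
    print(registry.run_chain(["dispatcher", "report"], {"text": "Pump 7 leaks"}))
```

Because the registry only relies on the shared interface, a third-party tool could be added by registering one more object, without changes to the existing tools.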

This brings us a significant step closer to our goal of complete and scalable integration of service knowledge for Service 4.0 for SMEs. Furthermore, we are thus reducing the resource-intensive trap of vendor lock-in, where individualized addressing and modification of interfaces, in particular, pose a problem. We are confident that this will enable specialists to process more complex tasks more easily.

For this purpose, Service-Meister will provide the previously explained AI building blocks, interfaces, and communication for a service API approach in open formats to the SME sector. We see this as the foundation and down-payment for a sustainable and successful Service-Meister platform that we will offer to the SME sector in 2022.

Dr. Fred Jopp has more than 20 years of experience in Big Data analytics and algorithm development in Applied Physics and Economics. He is Head of Business Solutions Public Sector and International Project Management at PASS IT-Consulting Dipl.-Inf. G. Rienecker GmbH & Co. KG.

Christine Neubauer is Project Manager for AI and Industry 4.0 at eco – Association of the Internet Industry. Currently, she is working on the Service-Meister project, an AI-based Service Ecosystem for Technical Service in the Age of Industry 4.0. She is part of the project coordination team, with distributed tasks such as planning, steering, agile actions, reactions, internal and external communication, and stakeholder management.

Hauke Timmermann is Consultant for Digital Business Models and is the Project Lead for the Service-Meister project at the eco Association.

Andreas Weiss is Head of Digital Business Models at eco – Association of the Internet Industry. He started with eco in 1998 with the Competence Group E-Commerce and Logistics, moving afterwards to E-Business. Since 2010, he has been leading the eco Cloud Initiative as Director of EuroCloud Deutschland_eco and is engaged in several projects and initiatives for the use of artificial intelligence, data privacy, GDPR conformity, and overall security and compliance of digital services.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s own and do not reflect the view of the publisher, eco – Association of the Internet Industry.