Artificial Intelligence in Support of Humans – Transparency, Trust, & Decision-Making

Prof. Norbert Pohlmann on the need for transparency in algorithms and for keeping the human being in the decision-making loop when AI systems are developed.

© francescoch | istockphoto.com

A version of this article was first published in German in 2019 by eco – Association of the Internet Industry, in the compendium “Digitale Ethik – Vertrauen in die digitale Welt” (forthcoming in English).

The fundamental prerequisite for acceptance of artificial intelligence is that it can gain society’s trust. In order to develop trust, a transparent approach to AI is imperative.

Such an approach includes imparting basic knowledge about how AI systems work and their methods – this must become part of the general public educational mandate. The basic components for building trust are transparency in terms of which data is generated and used, where the AI is used, and how the AI works.

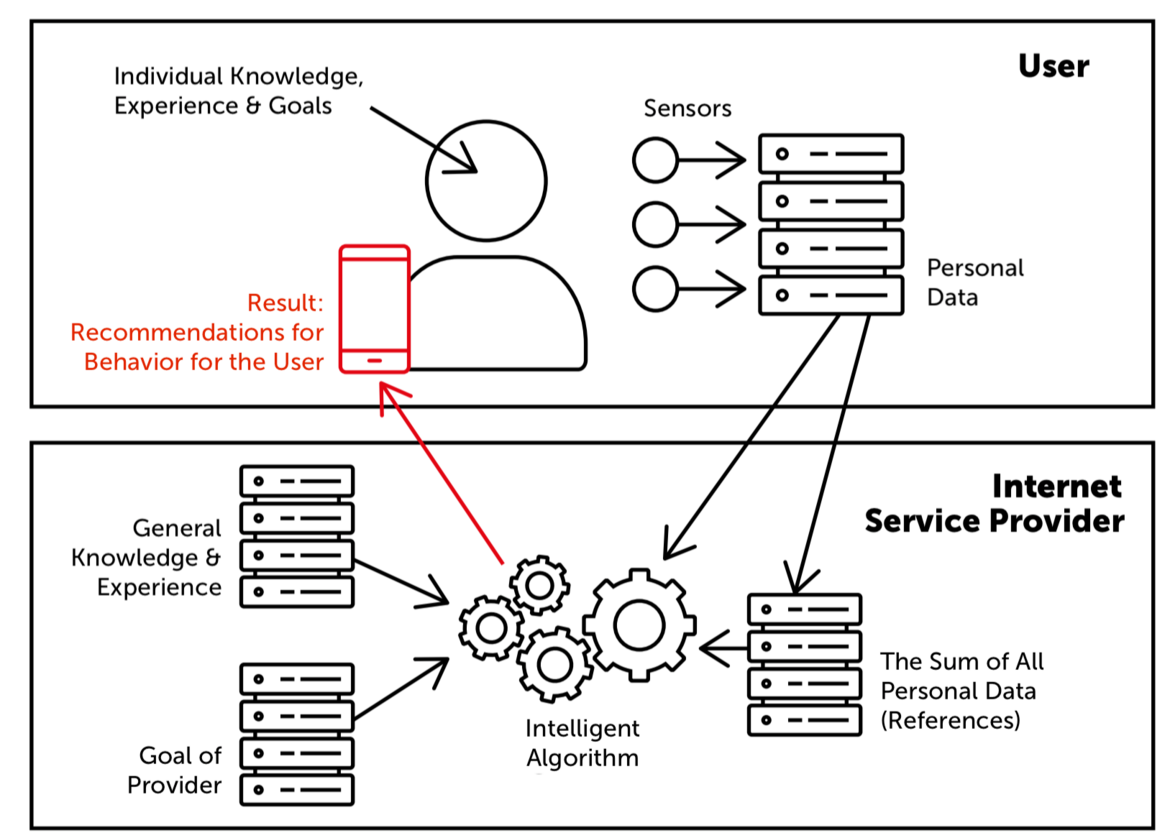

Internet services propose actions to users based on data from different types of sensors and sources, such as wearables, smartphones, and other Internet services. Intelligent algorithms take this huge amount of private sensor data, evaluate it, compare it with private data from other people, and draw on general knowledge and experience to generate recommendations for action for users (see Fig. 1).

This can be very useful when it comes to making good decisions.

Intelligent algorithms with copious amounts of data and almost unlimited computing power are an optimal complement to the individual human being with his or her personal knowledge, experience, and intuition.

Fig. 1: Recommendations for User Behavior on the Basis of Intelligent Algorithms, © eco – Association of the Internet Industry

Algorithms and transparency

If Internet services are transparent about how they arrive at their recommendations, well-calculated recommendations for action are extremely helpful for arriving at an optimal decision. However, if the Internet services themselves earn money indirectly from such recommendations, the calculated recommendation will serve the interest of the Internet service and its customers rather than the interest of the users. Every user will inevitably become a product. The problem here is that people can lose their self-determination. No modern society can want that.

I have previously mentioned the risk of bias in algorithms. This can partly be counteracted through transparency, but what is also needed here is diversity. Companies developing algorithms and services based on AI need to put a greater focus on balancing the gender and cultural diversity of their development teams. However, it is also a question of which data is used to train an algorithm. The input data that documents knowledge and experience also influences the ensuing results, so knowing what data was used is highly relevant when evaluating those results. If the input data contains prejudices and discriminatory views, the intelligent algorithms will produce correspondingly prejudiced and discriminatory results.
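The effect of skewed input data can be illustrated with a deliberately simple sketch. Everything in it is an assumption made for illustration: the records in `HISTORY` are invented, the group labels are placeholders, and the hypothetical `train_majority_model` helper merely memorizes the most frequent past outcome per group, which is far simpler than any real AI system. The point is only that a model trained on one-sided decisions reproduces them.

```python
from collections import Counter, defaultdict

# Invented historical decisions as (group, outcome) pairs.
# The skew between the two groups is deliberate.
HISTORY = [
    ("group_a", "approve"), ("group_a", "approve"),
    ("group_a", "approve"), ("group_a", "reject"),
    ("group_b", "reject"), ("group_b", "reject"),
    ("group_b", "reject"), ("group_b", "approve"),
]


def approval_rate(records, group):
    """Share of 'approve' outcomes for one group in the input data."""
    outcomes = [outcome for g, outcome in records if g == group]
    return sum(outcome == "approve" for outcome in outcomes) / len(outcomes)


def train_majority_model(records):
    """Toy 'model': remember the most frequent outcome seen for each group."""
    counts = defaultdict(Counter)
    for group, outcome in records:
        counts[group][outcome] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}


model = train_majority_model(HISTORY)

# Inspecting the input data makes the skew visible before any model is used.
for group in ("group_a", "group_b"):
    print(group, "approval rate in data:", approval_rate(HISTORY, group),
          "| model output:", model[group])
```

Publishing a summary like the per-group approval rate alongside a service is one modest way of making the training data transparent enough for its results to be evaluated.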

As Oliver Süme, Chair of the Board at the eco Association, has commented, “Trustworthy AI applications should be developed and used in such a way that they respect human autonomy, yet function securely, fairly, and transparently. With their developments, products, and services, digital companies are driving digital change and share responsibility for answering the associated societal questions that arise.”

For users of AI, it is important that the “Human in the Loop” model is drawn upon or, if this mechanism for regulation is not deployed, that an alternative is created for establishing the “rules of the game”. To maintain the requisite high level of knowledge and attention in this area, important activities include ongoing awareness-raising and information campaigns, employee training in companies, and early learning of the right tools for dealing with digital technologies.

Decision-making power and artificial intelligence

But it is not enough for the human to be in the loop: the human needs to retain self-determination as we move forward with digital transformation. Lucia Falkenberg, CPO of the eco Association and Leader of the eco Competence Group New Work, emphasizes the need for AI to be kept under human decision-making control, based on the premise that digital technologies are only tools that people consciously use. As Henrick Oppermann from USU points out, the human will necessarily remain pivotal in the application of AI for the foreseeable future. Sebastian Kurowski, from the Fraunhofer Institute for Industrial Engineering, argues that artificial intelligence is unable to make accountable decisions, because giving the reasoning behind a decision requires an additional layer of conceptualization that only humans have. Therefore, while AI technology can provide excellent support and recommendations for action, the final decision on actions must remain with people.
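The division of roles described here, in which the AI recommends and a person decides, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference design: the names `Recommendation`, `Decision`, and `decide` are hypothetical, and the human is represented by a simple callback that a real system would replace with a review interface.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Recommendation:
    """What the AI system proposes, including its reasoning for transparency."""
    action: str
    rationale: str


@dataclass(frozen=True)
class Decision:
    """The final, accountable decision, always attributed to a person."""
    action: str
    decided_by: str
    followed_recommendation: bool


def decide(recommendation: Recommendation,
           human_choice: Callable[[Recommendation], str],
           reviewer: str) -> Decision:
    """The recommendation is only an input; the human callback picks the action."""
    chosen = human_choice(recommendation)
    return Decision(
        action=chosen,
        decided_by=reviewer,
        followed_recommendation=(chosen == recommendation.action),
    )


# Hypothetical example: the reviewer reads the rationale and overrides the proposal.
rec = Recommendation(action="reject_application",
                     rationale="similar past cases were rejected")
decision = decide(rec, human_choice=lambda r: "request_more_information",
                  reviewer="case_officer")
print(decision)
```

Because every `Decision` records who made it and whether the recommendation was followed, accountability stays with a named person rather than with the algorithm.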

Artificial intelligence is a key technology for digital transformation and an economic driver for the coming decades. However, its economic potential must be kept in balance with the need for its application to benefit society as a whole. Without trust, it will not succeed. And only a transparent approach to AI can strengthen people’s trust in it and make it a success in the long term.

Norbert Pohlmann is a Member of the Board and Director of IT Security at eco – Association of the Internet Industry. He holds two positions at Westphalian University of Applied Sciences, Gelsenkirchen: Professor of Distributed Systems and Information Security in the field of IT, and Managing Director of the Institute for Internet Security. For five years, he was a member of the "Permanent Stakeholders' Group" of ENISA (European Network and Information Security Agency), the European Community's security agency (www.enisa.europa.eu).