The Next Generation of Optical Transceivers for AI

High-speed networking will shape the infrastructure of next-generation AI systems. Mustafa Keskin, Application Solutions Manager, Corning Optical Communications, explores the latest advancements in optical transceiver technology, including the rise of 1.6T transceivers and the potential of co-packaged optics.

© Olemedia | istockphoto.com

Introduction

Monitoring the optical transceiver roadmap helps me understand the type of fiber, the quantity needed, and the optical connectors that next-generation optical transceivers will use. This information allows me to predict the implications for structured cabling. When combined with insights into how next-generation Artificial Intelligence compute racks will evolve, I can better judge how many fibers per compute rack or network rack I should plan to deploy in new builds or refurbishments of existing infrastructure.

The Optical Fiber Communication Conference and Exhibition (OFC) at the beginning of the year and the European Conference on Optical Communication (ECOC) towards the end of the year are key ‘heartbeat’ events of the industry for those who want to understand existing and emerging trends in the optical communications field. Below are the key trends/highlights I collected from these events in 2024. I am highlighting these trends because I expect them to continue into 2025.

The rise of the 1.6T transceivers

Current 800G transceivers on the market use 100G/lane speeds. An 800G transceiver therefore has 8 lanes, and because each lane requires a pair of optical fibers for full-duplex communication (transmitting and receiving simultaneously), it needs 16 fibers.
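The lane and fiber arithmetic above can be written as a small helper. This is a minimal sketch, assuming parallel optics (SR/DR style) with one transmit and one receive fiber per lane; it does not apply to wavelength-multiplexed variants such as FR4, which carry several lanes over one duplex fiber pair. The function name `lanes_and_fibers` is hypothetical.

```python
def lanes_and_fibers(total_gbps: int, lane_gbps: int) -> tuple[int, int]:
    """Return (lanes, fibers) for a parallel-optics transceiver.

    Assumption: one transmit fiber plus one receive fiber per lane.
    """
    if total_gbps % lane_gbps != 0:
        raise ValueError("total rate must be a whole multiple of the lane rate")
    lanes = total_gbps // lane_gbps
    return lanes, lanes * 2


print(lanes_and_fibers(800, 100))  # 800G at 100G/lane -> (8, 16)
print(lanes_and_fibers(400, 100))  # each 400G half of a combo module -> (4, 8)
```

The second call shows why a 2x400G combo package fits two 8-fiber MTP (MPO) connectors.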

Most transceiver manufacturers offer the 800G-SR8 multimode transceiver as 2x400G-SR4 and the 800G-DR8 single-mode transceiver as 2x400G-DR4 combo packaging, which has 2xMTP (MPO) connectors on the front side instead of a single 1xMTP16 (MPO16) port.

Using combo ports increases the effective switch radix, because each 800G port can be split into two 400G links. This enables network architects to build larger fat-tree networks that support larger GPU clusters.

Emergence of early 1.6T transceiver designs

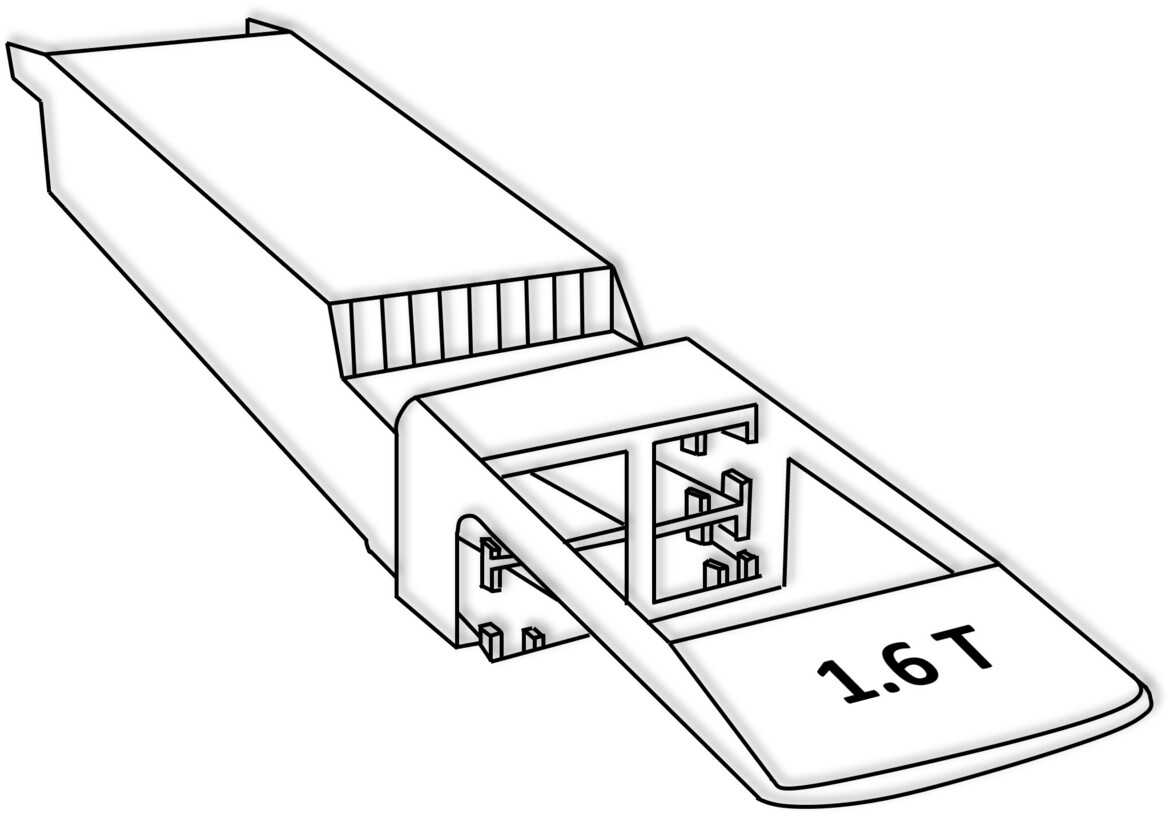

During my visits to exhibition halls at major industry conferences, I noticed that the early commercially available 1.6Tb/s transceivers are all offered in single-mode (SM) packaging, either as 2x800G-DR4 (with dual MTP12 connectors) or as 2x800G-FR4 (with dual LC connectors), in octal small form factor pluggable (OSFP) modules.

1.6T OSFP Module with Dual MTP12 Interface

1.6T OSFP Module with Dual LC Interface

Development of 200G/lane VCSELs (vertical-cavity surface-emitting lasers), a key technology for high-speed data transmission used in multimode transceivers, was expected to be completed in 2025, with volume manufacturing to start in 2026. For now, however, the 1.6T speed class is dominated by SM transceivers.

Progress toward 400G/lane speeds and beyond

There was also talk of 400G/lane speeds at these events, and industry influencers predicted that solutions using 400G/lane speeds would take longer to design and qualify. The first 448G PAM4 (Pulse Amplitude Modulation with four levels) SerDes, used for high-speed signal modulation, was expected to be available in 2027, with a ramp-up of manufacturing volume in 2028. This means that transceivers that can accommodate 400G/lane speeds will most probably be available towards the end of this decade.

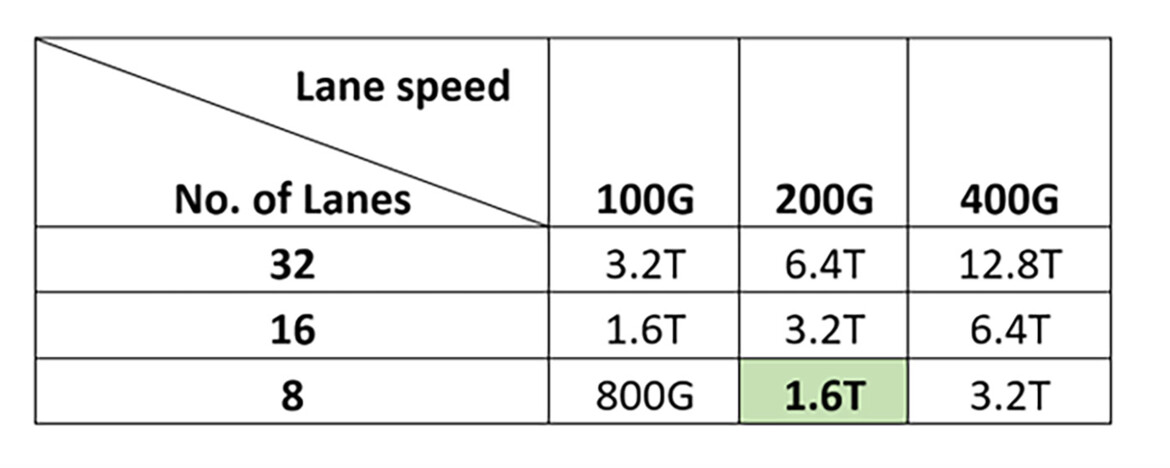

The industry consensus is that transceiver manufacturers will add more lanes only if it is not possible to increase the lane speed. If 400G/lane speeds do not arrive on time, we can expect manufacturers to double the lane count of the upcoming 200G/lane solutions and reach 3.2 terabits per second, for example by using 2xMTP16 connectors on the transceiver package. As we are approaching 1.6T, the table below shows some of the possibilities in front of us.
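The lane-doubling scenario described in this section can be checked with a short calculation. This is a sketch under stated assumptions: parallel optics with two fibers per lane, and 16-fiber connectors packed as tightly as possible. The function name `connector_plan` is hypothetical, and real modules may use more connectors than this minimum.

```python
import math


def connector_plan(total_gbps: int, lane_gbps: int, fibers_per_connector: int = 16) -> dict:
    """Lanes, fibers, and minimum connector count for a parallel-optics module.

    Assumptions: two fibers per lane, connectors filled completely.
    """
    lanes = total_gbps // lane_gbps
    fibers = lanes * 2
    connectors = math.ceil(fibers / fibers_per_connector)
    return {"lanes": lanes, "fibers": fibers, "connectors": connectors}


# 3.2T reached by faster lanes versus by more lanes
for lane_gbps in (400, 200):
    print(f"{lane_gbps}G/lane:", connector_plan(3200, lane_gbps))
```

At 400G/lane the module needs 8 lanes and 16 fibers; at 200G/lane it needs 16 lanes and 32 fibers, which is where the 2xMTP16 option comes from.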

Advancements in transceiver packaging

When it comes to packaging optical transceivers, there were two competing schools of thought: linear-drive pluggable optics and co-packaged optics. Traditionally, pluggable optics used a digital signal processor (DSP) on both the transmit and receive sides inside the optical transceiver package, which required considerable power.

Co-packaged optics (CPO), with its close integration to the switch Application-Specific Integrated Circuits (ASICs), can utilize switch DSPs without needing an additional one, offering significant power savings over pluggable optics. With the introduction of the latest switch ASICs, pluggable optic manufacturers realized that they could also afford not to use DSPs on transceivers, hence joining the club of linear optics manufacturers but with the advantage of modularity, ease of testing, and serviceability.

It looks like the CPO team is on the verge of something. While no hyperscale customer has adopted CPO solutions yet, NVIDIA shared its roadmap for CPO switches at its GTC conference in March 2025, where it announced that the first CPO switch will be available as early as 2026. The work done in the background by active equipment manufacturers is finally producing a clear roadmap for this promising innovation, which addresses multiple needs of upcoming AI systems.

Linear Pluggable Optics => LPO

Co-Packaged Optics => CPO

The need for higher speeds: Driven by AI

One may ask the fair question, ‘We've just started to implement 400G systems, and you are talking about 1.6T and 3.2T systems. What is the rush, Mustafa?’ It all boils down to the momentum created by generative AI, which is made possible by crunching more and more data, known as the scaling law, using high-performance Graphics Processing Units (GPUs) working together in large clusters, known as AI factories. In 2020, the largest Large Language Model (LLM), GPT-3, used 175 billion parameters. GPT-4, released by OpenAI in 2023, is rumored to have had 1.6 trillion parameters.

The current industry consensus is that models get smarter if they ingest more data; hence, there will be a need for more compute power, meaning that clusters of hundreds of thousands of GPUs working at the highest speed possible are critical. Additionally, today’s LLMs are text-based systems that can mimic human-like responses in written language. Adding voice and video to the mix will sharply increase the compute power needed to provide a seamless user experience with future AI-enabled solutions.

AI racks and fiber connectivity

A typical AI factory of the near future is expected to accommodate 100k GPUs and consume 300-400MW of power. The key building block of an AI factory is the compute rack, and the key benchmark for the latest high-performance AI racks is the NVIDIA NVL72 rack, which accommodates 72 GPUs and 36 CPUs (Central Processing Units).

If we consider a non-blocking network fabric for the back-end network, which is the GPU fabric, we will need 72x8=576 fibers if we use 800G-DR4 Single-Mode Fiber (SMF) transceivers. Using the same logic, the CPUs will need to connect to the front-end network and the storage-area network using 36x8=288 fibers. In total, 576+288=864 fibers will be needed per AI rack.
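The per-rack count above can be reproduced in a few lines. This is a minimal sketch that follows the text's own assumption of one 800G-DR4 port (4 lanes, 8 fibers) per GPU and per CPU; the function name `rack_fibers` is hypothetical.

```python
FIBERS_PER_DR4_PORT = 8  # 4 lanes x 2 fibers (transmit + receive)


def rack_fibers(gpus: int, cpus: int, fibers_per_port: int = FIBERS_PER_DR4_PORT) -> tuple[int, int, int]:
    """Return (back-end, front-end/storage, total) fiber counts for one rack.

    Assumption: one DR4 port per GPU (back-end) and one per CPU (front-end/storage).
    """
    backend = gpus * fibers_per_port
    frontend = cpus * fibers_per_port
    return backend, frontend, backend + frontend


print(rack_fibers(72, 36))  # NVL72 example -> (576, 288, 864)
```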

This first layer of spine-and-leaf fabric is connected using multimode (MM) fiber today, but because 200G/lane VCSEL development is progressing more slowly, we may see a change of fiber type as early 1.6T systems are built.

Scaling fiber connectivity for next-generation AI racks

Industry leaders also expect next-generation AI racks to host 128 XPUs (XPU being a more generic acronym that covers CPU, GPU, FPGA (Field-Programmable Gate Array), and ASIC (Application-Specific Integrated Circuit)) with a 400+kW power load. This will require (128 GPUs + 64 CPUs) x 8 fibers (DR4 optics) = 1536 single-mode fibers per AI rack.

A 512-GPU pod consisting of 4 AI racks will require over 6000 fibers at the networking cabinet, and a 100k GPU cluster will require 800 x 1536 = 1.2 million fiber strands at the leaf-spine level. We will need to double this for a two-tier spine-core architecture.
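The scaling from rack to pod to cluster can be sketched as follows. The per-rack figure is computed directly from the (128 + 64) x 8 expression, and the 800-rack figure is the text's round number; the exact rack count for 100,000 GPUs is shown alongside it for comparison. All names are hypothetical, and one DR4 port per GPU and per CPU is assumed.

```python
import math

FIBERS_PER_DR4_PORT = 8  # assumption from the text: 8 fibers per DR4 port


def next_gen_rack_fibers(gpus: int = 128, cpus: int = 64) -> int:
    """Fibers for one next-generation rack, one DR4 port per GPU and per CPU."""
    return (gpus + cpus) * FIBERS_PER_DR4_PORT


per_rack = next_gen_rack_fibers()        # (128 + 64) * 8
pod = 4 * per_rack                       # 512-GPU pod of 4 racks
racks_exact = math.ceil(100_000 / 128)   # racks needed for 100k GPUs
cluster_rounded = 800 * per_rack         # using the round figure of 800 racks
cluster_exact = racks_exact * per_rack

print(per_rack, pod, racks_exact, cluster_rounded, cluster_exact)
# -> 1536 6144 782 1228800 1201152
```

Either way of counting racks lands at roughly 1.2 million fibers, so the headline figure does not depend on the rounding.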

One way to bring these fiber counts to AI server and network racks is to use the newly developed Tiny MT (TMT) ferrule connector, known as the MMC connector, which can terminate 3456 fibers using 216 MMC16 connector ports in 2U of rack space. The server and network connectivity can then be done simply using MMC-to-MTP8/MPO8 breakout harnesses within these racks.
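To relate this density figure to the rack fiber counts, the following sketch estimates how many MMC16 ports and MTP8/MPO8 breakout legs a rack would need. It assumes every MMC16 port is fully populated and that each 16-fiber MMC breaks out into two 8-fiber legs; both are illustrative assumptions, and `mmc_breakout_plan` is a hypothetical name.

```python
import math

FIBERS_PER_MMC16 = 16
MMC16_PORTS_PER_2U = 216
FIBERS_PER_MTP8 = 8

# Capacity of one 2U panel as described in the text
print(MMC16_PORTS_PER_2U * FIBERS_PER_MMC16)  # -> 3456


def mmc_breakout_plan(rack_fibers: int) -> dict:
    """MMC16 ports and MTP8 breakout legs needed to serve one rack.

    Assumptions: fully populated ports, two 8-fiber legs per MMC16.
    """
    ports = math.ceil(rack_fibers / FIBERS_PER_MMC16)
    legs_per_port = FIBERS_PER_MMC16 // FIBERS_PER_MTP8
    return {
        "mmc16_ports": ports,
        "mtp8_legs": ports * legs_per_port,
        "share_of_2u_panel": ports / MMC16_PORTS_PER_2U,
    }


print(mmc_breakout_plan(864))   # 72-GPU rack
print(mmc_breakout_plan(1536))  # 128-GPU rack
```

Under these assumptions, an 864-fiber rack uses 54 MMC16 ports (a quarter of one 2U panel) and a 1536-fiber rack uses 96.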

Future fiber connectivity solutions: The MMC connector

With its 16-fiber design, the MMC16 connector seems to be a good fit for supporting today's 400G, tomorrow's 800G, and future 1.6T speeds. Potential future versions with 32 fibers per connector could also support extremely high-density fiber environments.

MMC connector (REN10876)

Ganged MMC adapters

Conclusion

Industry conferences offer valuable opportunities to learn from competitors, gain multiple perspectives, and extract key takeaways. Key trends identified include:

- Next-gen transceivers: 200G/lane transceivers are on the horizon, with single-mode optics potentially arriving before multimode.

- Transceiver packaging: Co-packaged optics and linear pluggable optics are gaining traction, but vendor interoperability issues need resolution.

- AI-driven communication: Growth of AI models is driving demand for high-speed communication in large AI factories.

- Fiber requirements for AI racks: Current AI racks have 72 GPUs, with future racks requiring around 1500 fibers per rack and millions of fibers for 100k GPU clusters. MMC16 connectors are a viable solution for delivering this fiber density.

With over 20 years of experience in the optical fiber industry, Mustafa Keskin is an accomplished professional currently serving as the Data Center Solution Innovation Manager at Corning Optical Communications in Berlin, Germany. He excels in determining architectural solutions for data center and carrier central office spaces, drawing from industry trends and customer insight research.

Please note: The opinions expressed in Industry Insights published by dotmagazine are the author’s or interview partner’s own and do not necessarily reflect the view of the publisher, eco – Association of the Internet Industry.